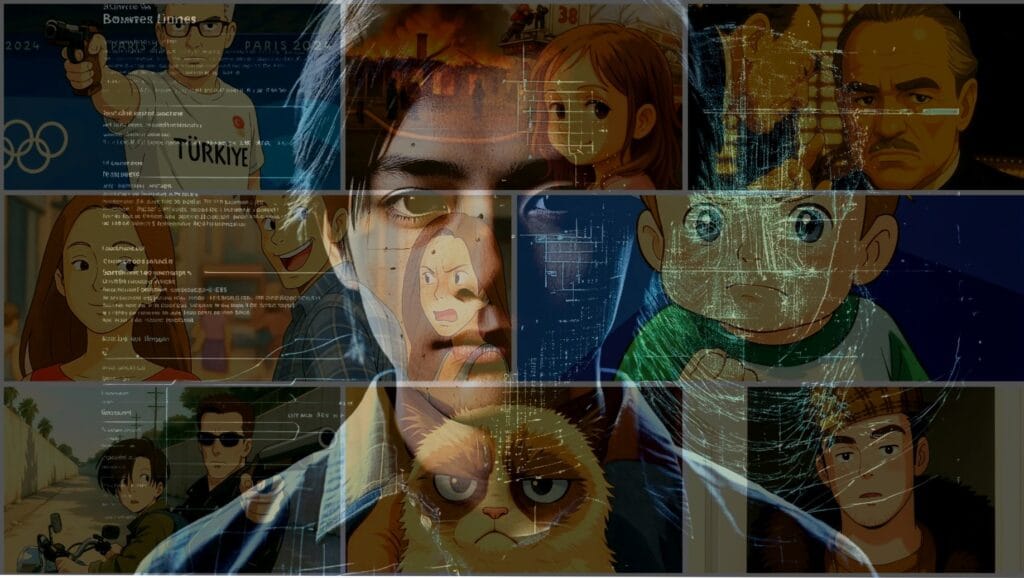

For the past week, the internet has exploded with Ghibli-style selfies. Across Instagram, X, and TikTok, timelines are being overrun with watercolor avatars, dreamy filters, and AI animations that turn your face into a character seemingly lifted from Spirited Away or My Neighbor Totoro. It feels harmless, creative, even nostalgic. But behind the surface of this innocent trend lies a deeper, more complicated reality that few seem to be considering: where does all this biometric data actually go?

Let’s not beat around the bush: you are feeding your face to an AI.

When Art Becomes Data

The moment you upload your photo into these Ghibli-style AI generators, you’re doing more than just playing with a creative tool. You are submitting high-resolution, well-lit, front-facing images perfect for facial recognition training. Unlike scraped images from the web, which can be low-quality or taken from odd angles, selfies handed willingly to AI apps are gold-standard data for any model learning to mimic or replicate human faces.

This isn’t speculation. Most users assume they are simply using a fun transformation tool, but in reality, they may be granting companies unrestricted access to their biometric data.

That’s a chilling reality to consider. While the avatars may vanish quickly, the data you give up could stick around much longer.

Consent vs Convenience

One of the more disturbing aspects of this phenomenon is how quickly people opt in, giving consent under vague terms buried in small-print legalese. Broadly worded consent forms often don’t specify how long your photos will be retained, whether they will be shared, or even if they’ll be used to train other models. And let’s be honest: no one is reading the terms before uploading their selfie.

Many of these companies aren’t transparent about data storage practices. Some retain photos indefinitely. Others say they delete them, but don’t provide audit trails or verification. Worse, in jurisdictions with weak AI regulations, users might be surrendering rights to their facial data altogether.

And the kicker? Once your biometric data is out there, it’s almost impossible to get it back.

The Biometric Gold Rush

Facial data is biometric data. It’s not just a picture; it’s a digital key to your identity. Your face can unlock your phone, verify your banking app, or pass an airport security scan. That same face, uploaded to an AI tool for fun, can just as easily be used to train deepfake algorithms or forge synthetic identities.

Deepfake technology is advancing at an alarming pace. AI-generated faces are already being exploited for financial fraud and impersonation.

These avatars may be cute, but behind them is a serious vulnerability. There’s already a surge in AI-driven identity theft. Imagine someone using your stylized, high-res face to bypass know-your-customer (KYC) checks or gain access to systems that rely on visual confirmation.

And yes, this is already happening.

Global Legal Grey Zones

So, what about the law? Are companies even allowed to keep your face?

Well, it depends.

In regions like the EU (under GDPR), California (CCPA), or India (DPDP Act), companies face consent, notice, or purpose-limitation requirements before storing or reusing biometric data, though the details differ from law to law. But in much of the world, AI data governance remains immature or completely absent.

Even in countries with solid laws, enforcement is a different beast. Companies often exploit jurisdictional loopholes, host their servers in less regulated areas, or offer international services that sidestep local protections.

Worse still, the concept of “synthetic identities,” AI-generated faces that aren’t real but look real enough to pass as human, blurs legal boundaries even further. Current laws protect real biometric data. But how do we legislate a face that doesn’t exist, yet was generated based on the real facial data of millions?

India: The Epicenter of AI Enthusiasm

India is currently the fastest-growing market for ChatGPT. According to OpenAI’s Brad Lightcap, over 130 million users generated more than 700 million images in the feature’s first week, and India is embracing generative AI with open arms.

While this represents a surge in creativity and innovation, it also exposes a massive population to privacy and environmental risks. The upcoming Digital India Act may address some of these concerns, but for now, users are largely unprotected.

“very crazy first week for images in chatgpt – over 130M users have generated 700M+ (!) images since last tuesday

India is now our fastest growing chatgpt market 💪🇮🇳

the range of visual creativity has been extremely inspiring

we appreciate your patience as we try to serve…”

Brad Lightcap (@bradlightcap), April 3, 2025

Are we trading security for aesthetics? Is that a deal worth making?

Ghibli-style Aesthetics, Hidden Costs

While the ethical and privacy dimensions of this trend are alarming, there’s another hidden cost that rarely enters the conversation: energy and environmental impact.

Artificial Intelligence is computationally expensive. Generating a single 100-word email with GPT-4 consumes about 0.14 kWh, enough electricity to power 14 LED light bulbs for an hour. That’s just text. Image generation is far more resource-intensive.
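To sanity-check the light bulb comparison, here is a minimal sketch of the arithmetic. It assumes a typical LED bulb draws about 10 watts; that wattage is an assumption, not a figure from the study, and the function name is purely illustrative.

```python
# Figure quoted above for generating one 100-word email.
EMAIL_KWH = 0.14

# Assumption: a typical LED bulb draws roughly 10 watts.
LED_BULB_WATTS = 10


def bulb_hours(energy_kwh: float, bulb_watts: float = LED_BULB_WATTS) -> float:
    """Return how many hours a single bulb could run on the given energy."""
    watt_hours = energy_kwh * 1000  # convert kWh to Wh
    return watt_hours / bulb_watts


hours = bulb_hours(EMAIL_KWH)
print(f"{EMAIL_KWH} kWh keeps one {LED_BULB_WATTS} W bulb lit for about {hours:.0f} hours")
```

Under that assumption, one bulb running for roughly 14 hours is the same energy as 14 bulbs running for one hour, which matches the comparison.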

According to a recent study from the Washington Post and the University of California, generating images via models like DALL-E or Midjourney can consume enough electricity daily to power the Empire State Building for over a year. Let that sink in.

Water usage? Just as bad. AI data centers use freshwater for cooling, often in quantities that rival public utilities. ChatGPT alone is estimated to use around 39.16 million gallons of water daily. That’s equivalent to the entire population of Taiwan flushing a toilet simultaneously.
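The Taiwan comparison can be checked the same way. This is a rough sketch under two assumptions that are not in the original figures: a population of about 23.4 million for Taiwan and about 1.6 gallons per flush for a standard modern toilet.

```python
# Estimate quoted above for ChatGPT's daily water use.
DAILY_WATER_GALLONS = 39.16e6

# Assumptions for illustration only.
TAIWAN_POPULATION = 23.4e6  # approximate population
GALLONS_PER_FLUSH = 1.6     # approximate volume of one modern toilet flush


def gallons_per_person(total_gallons: float, population: float) -> float:
    """Spread a total water volume evenly across a population."""
    return total_gallons / population


share = gallons_per_person(DAILY_WATER_GALLONS, TAIWAN_POPULATION)
print(f"About {share:.2f} gallons per person, versus {GALLONS_PER_FLUSH} gallons per flush")
```

If those assumptions hold, the per-person share comes out close to a single flush, so the comparison is in the right range.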

Even OpenAI CEO Sam Altman admits the infrastructure strain is real. He’s publicly stated that server demand is causing outages, delaying new feature releases, and straining power grids.

At What Cost?

The big players know there’s a problem. OpenAI is scrambling to build out capacity, including hardware R&D projects, proprietary chip development, and multi-billion-dollar investments led by SoftBank. But while they chase growth, the public is still in the dark.

Altman calls the explosion in usage “amazing” but simultaneously warns of “adverse consequences” due to capacity issues. Translation? This boom isn’t sustainable.

Every Ghibli-style avatar generated on ChatGPT contributes to this load. It’s not just your data at stake; it’s your power grid, your water supply, and ultimately, your planet.

Critical Questions Every User Should Ask

Before you hit that ‘generate’ button, consider the following:

- Is the app clear about how it uses your images?

- Does it delete them after use, or are they stored? If stored, for how long?

- Can you opt out or delete your data entirely?

- Does the company comply with GDPR, CCPA, or India’s DPDP Act?

- What are their data security practices?

If these questions aren’t answered transparently, you may be handing over your identity to a black box.

The Price of Play

In this digital age, your data is the currency. And your face? That’s one of the most valuable assets you have. It can be used to unlock devices, verify transactions, or fool a security system. And yet, we give it away for free, for fun.

This isn’t just about Ghibli avatars. It’s about a pattern of normalized data surrender. We’ve reached a point where trading biometric information for convenience is not just accepted, it’s encouraged.

We like to believe that tech makes life easier, more creative, more connected. But with every viral trend, we edge closer to a future where privacy is not lost, but handed over willingly, one click at a time.

It’s time to stop treating this as just another social trend. This is a wake-up call. The next big AI craze may already be in your camera roll.

So ask yourself: are you ready to trade your biometric identity for a pretty picture?