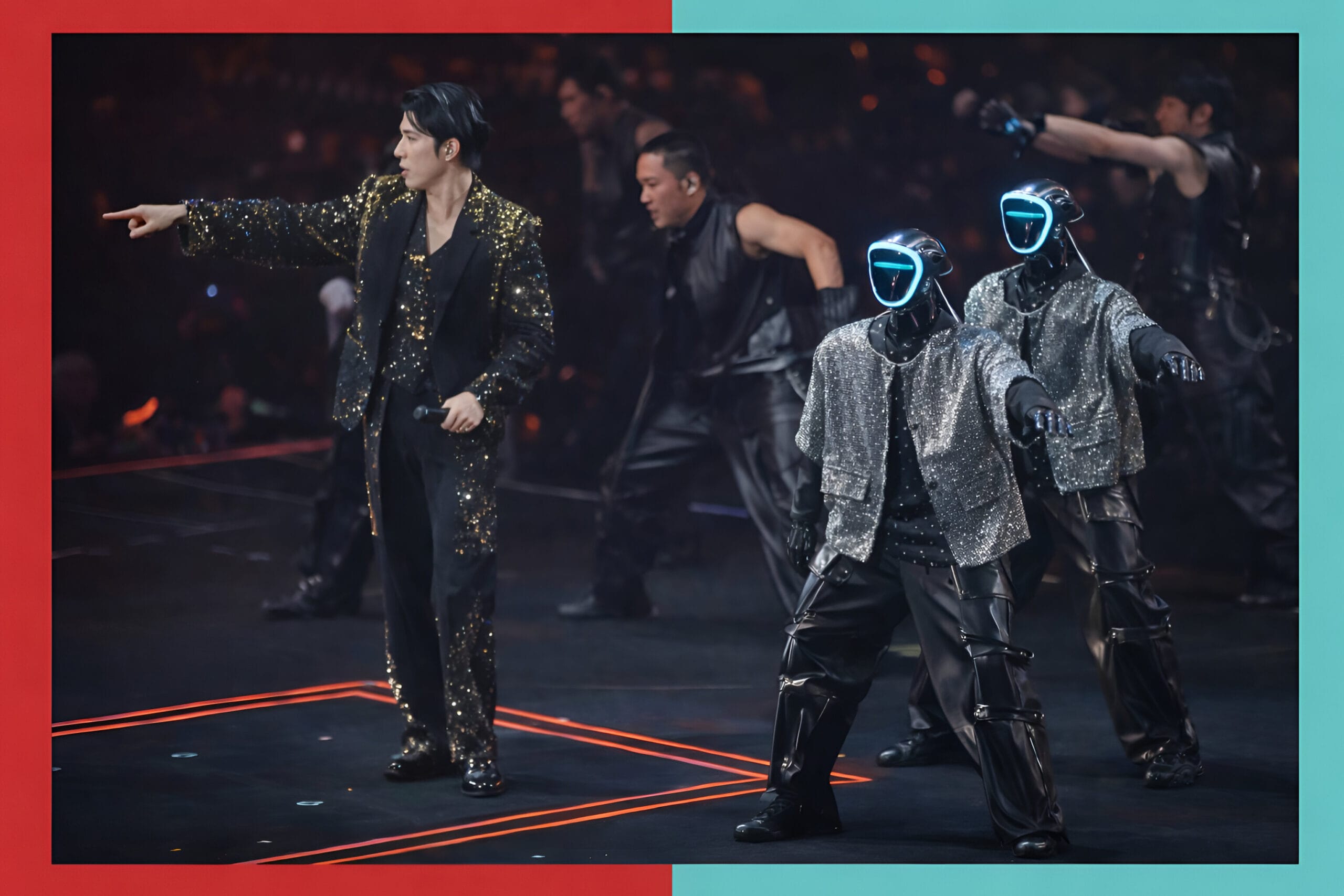

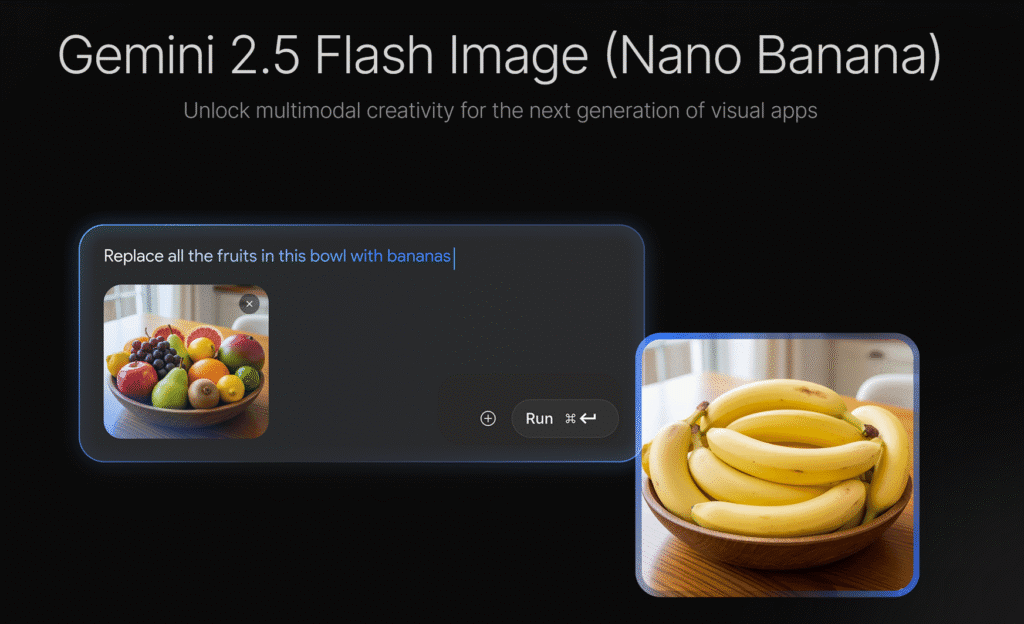

So, here’s the deal. You’ve probably seen the “Nano Banana Saree” trend all over your feeds: people feed their selfies to Google’s Gemini AI and get back a vintage, 90s-style portrait. It looks fun, it’s free, and everyone’s doing it.

On the surface, it’s a simple image generation task. But a recent incident exposed a serious vulnerability in the system, and it’s a reminder that your fun new toy may be a security risk you haven’t thought about.

The trend works on a basic principle. User provides input (a selfie). AI processes input based on a prompt (“retro vintage, saree, 90s aesthetic”). AI provides output (the stylized portrait). Simple, right? But one user’s experience uncovered a critical bug in this seemingly straightforward process.

The Bug Report: Unreported Data Input

The user, Jhalak Bhawani, ran her selfie through the system. The output was a clean, polished portrait. But upon a closer look, she found a detail that shouldn’t have been there: a mole on her left hand. A physical trait she has in real life, but which was not visible in the source photo she uploaded.

Reported Behavior: AI generates a new image based on a provided photo and text prompt.

Expected Behavior: The generated image is based solely on the data provided in the photo and prompt.

Actual Behavior: The generated image includes a detail (a mole) not present in the provided photo, but which exists on the user’s body.

Status: CRITICAL. This indicates that the system is pulling data from a source outside of the specified input.
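
The expected behavior above amounts to a simple invariant: nothing identifying should show up in the output unless it came from the photo or the prompt. Here is a minimal sketch of that check, assuming some hypothetical feature extractor has already reduced each image to a set of labels. The function name and the labels are illustrative only and are not part of any Gemini API.

```python
def undeclared_features(photo: set[str], prompt: set[str], output: set[str]) -> set[str]:
    """Return features in the output that neither the photo nor the prompt accounts for."""
    return output - (photo | prompt)


# Hypothetical labels standing in for the incident described above.
photo = {"face", "hair", "left_hand"}
prompt = {"saree", "90s_aesthetic"}
output = {"face", "hair", "left_hand", "saree", "90s_aesthetic", "mole_on_left_hand"}

print(undeclared_features(photo, prompt, output))  # {'mole_on_left_hand'}
```

In practice a generative model invents plenty of detail on its own, so an unexplained feature is not alarming by itself. The case worth flagging is an invented detail that happens to match reality.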

The user immediately flagged this. The community response was split, but both sides missed the main point. The first group called it a “hallucination,” a common term for AI fabricating data. This is a naive assessment. While AI models can hallucinate, a hallucination that perfectly matches a real, physical feature not present in the input is not a random occurrence. It’s a data-dependent event. The second group, equally misguided, dismissed it as a simple coincidence, essentially saying, “It’s normal, man.” It is not. The system should not have access to that information.

The System Analysis: How This Happened

There are two primary hypotheses for how this happened, and neither of them is good.

Hypothesis 1: The Cross-Application Data Pull.

The most likely scenario is a security lapse in data compartmentalization. Gemini is a Google product. Google Photos, Google Drive, Google Search—they are all part of a single, interconnected ecosystem. It is technically plausible that the Gemini AI, in a bid to “improve” the quality of its output or create a more “realistic” representation, accessed other data sources associated with the user’s Google account. This is a significant breach of implied privacy. Even if a user hasn’t explicitly linked their Google Photos to Gemini, a back-end data pipeline might exist for training or processing purposes. The user may not have given explicit, granular consent for their full Google data profile to be used for this single image edit. This is a critical failure in the consent-driven security model.
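
To make “data compartmentalization” concrete, here is a rough sketch of what a consent-scoped request could look like. Everything in it is an assumption for illustration: the class names, the scope strings, and the idea that an image edit carries an explicit scope list. It does not describe how Gemini or any Google service actually works.

```python
from dataclasses import dataclass, field


class ScopeError(PermissionError):
    """Raised when a request touches a data source the user did not consent to."""


@dataclass
class EditRequest:
    user_id: str
    consented_scopes: frozenset  # hypothetical scope names, e.g. {"uploaded_photo", "prompt"}
    access_log: list = field(default_factory=list)

    def read(self, source: str) -> str:
        # Deny anything outside the scopes granted for this single edit.
        if source not in self.consented_scopes:
            raise ScopeError(f"{source!r} is outside the consented scopes")
        # Record every permitted access so it can be audited later.
        self.access_log.append(source)
        return f"<data from {source}>"


request = EditRequest("user-123", frozenset({"uploaded_photo", "prompt"}))
request.read("uploaded_photo")      # allowed
try:
    request.read("photo_library")   # not consented for this edit
except ScopeError as err:
    print("blocked:", err)
```

Under a model like this, a cross-application pull would either fail loudly or leave a trace in the access log, which is the kind of transparency the user never got.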

Hypothesis 2: The Training Data Echo.

A less likely, but still concerning, possibility is that the mole was not pulled in real-time but was part of the AI’s vast training data. If the model had previously been trained on other public or scraped images of the user from social media (e.g., Instagram, Facebook), it could have learned to associate that physical trait with her. While the model is not supposed to retain personally identifiable information, the ability to generate specific, real-life features from a very limited input is a terrifying sign of data leakage from the training set.

Both hypotheses lead to the same conclusion: the user’s data was compromised in a way that was not expected, consented to, or transparent.

The Security Gaps: A Broken Promise

The response from the tech industry to these events is consistently inadequate.

Their solution? Watermarks. Google’s SynthID and similar tools are marketed as safeguards to identify AI-generated images. This is a joke. As security experts have pointed out, watermarking is not a solution, but a PR move.

- It’s not a deterrent: A watermark does nothing to prevent the malicious use of a tool.

- It’s easily removed: A savvy actor can strip metadata and watermarks in seconds.

- It’s not user-facing: Most users cannot even verify if a watermark is present or legitimate.

- It does not address the data source: The watermark is applied after the generation. It says nothing about where the AI got the data to begin with.

The bottom line is that these are not bugs; they are features. The business model of these free-to-use AI tools is based on a value exchange: you get a free service, and the company gets your data to further train their models. This is a system built on a flawed premise of user consent where no one reads the fine print.

The Post-Mortem: What We Learned

The Nano Banana Saree incident is not an isolated event. It is a microcosm of the larger, systemic issues in the AI space. Here’s the final report:

- Rule #1: If it’s free, you’re the product. Your data is the currency. Your face, your photos, and your preferences are all being used to train the next version of the model.

- Rule #2: Consent Is an Illusion. “Informed consent” is a meaningless term when no one, not even the developers, fully knows how these systems use your data.

- Rule #3: Assume Breach. When dealing with any consumer-facing AI tool, assume your data is not secure and that it is being used in ways you did not intend.

The “Nano Banana Saree” may have been a fleeting trend, but the mole wasn’t a fluke. It was a clear signal that in this system, privacy isn’t a feature; it’s a bug.