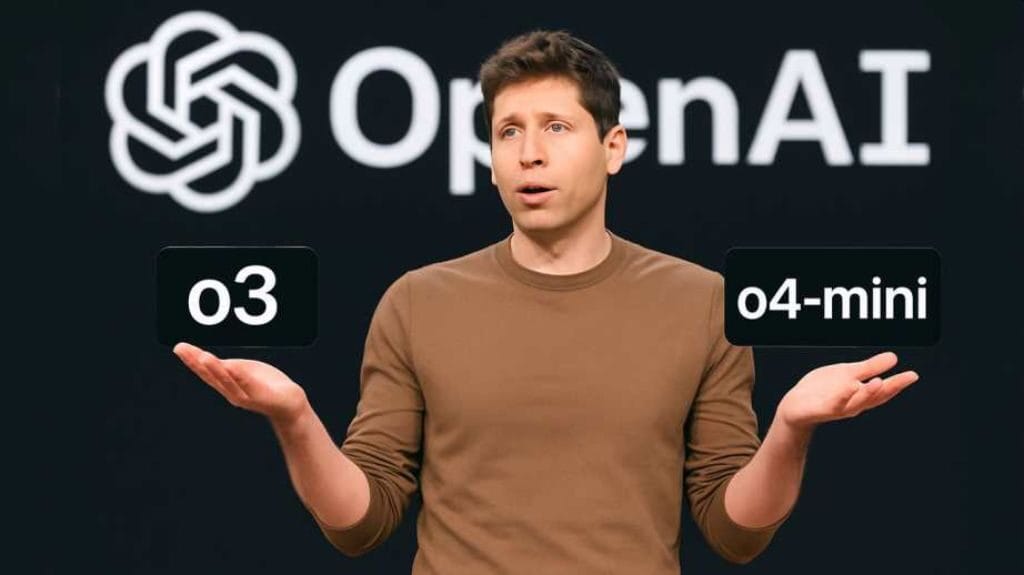

In the ongoing debate around AI’s environmental footprint, OpenAI CEO Sam Altman made a surprising claim this week. In a blog post titled “The Gentle Singularity,” Altman wrote that an average ChatGPT query uses about 0.000085 gallons of water, or roughly one-fifteenth of a teaspoon.

Altman dropped this stat while addressing growing concerns about AI’s energy and water usage. He also said that each ChatGPT query consumes 0.34 watt-hours of electricity, which he compared to what an oven uses in a little over a second or what a high-efficiency lightbulb burns through in a couple of minutes. As AI systems like ChatGPT continue scaling globally, environmentalists have been raising alarms over how much water and power these models consume, especially in drought-prone regions.
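The comparisons above are simple unit conversions, so they can be checked directly. The sketch below is only a sanity check of the arithmetic, not a reconstruction of how OpenAI arrived at its figures. The two per-query numbers come from Altman's post as quoted here; the appliance wattages (a 1,000 W oven and a 10 W LED bulb) are assumptions chosen for illustration, and the function names are hypothetical.

```python
# Sanity check of the per-query comparisons quoted in the article.
# Per-query figures are the ones attributed to Altman's post.
WATER_GAL_PER_QUERY = 0.000085
ENERGY_WH_PER_QUERY = 0.34

# Standard US customary conversions.
TEASPOONS_PER_US_GALLON = 768       # 128 fl oz per gallon x 6 tsp per fl oz
ML_PER_US_GALLON = 3785.411784

# ASSUMPTIONS (not from the article): typical appliance power draw.
OVEN_WATTS = 1000.0
LED_BULB_WATTS = 10.0


def gallons_to_teaspoons(gallons: float) -> float:
    """Convert US gallons to US teaspoons."""
    return gallons * TEASPOONS_PER_US_GALLON


def gallons_to_ml(gallons: float) -> float:
    """Convert US gallons to milliliters."""
    return gallons * ML_PER_US_GALLON


def seconds_at_power(watt_hours: float, watts: float) -> float:
    """How long a device drawing `watts` takes to use `watt_hours` of energy."""
    return watt_hours * 3600.0 / watts


if __name__ == "__main__":
    tsp = gallons_to_teaspoons(WATER_GAL_PER_QUERY)
    print(f"Water per query: {tsp:.4f} tsp (about 1/{1 / tsp:.1f} of a teaspoon)")
    print(f"Water per query: {gallons_to_ml(WATER_GAL_PER_QUERY):.3f} mL")
    print(f"Oven time:  {seconds_at_power(ENERGY_WH_PER_QUERY, OVEN_WATTS):.2f} s")
    print(f"Bulb time:  {seconds_at_power(ENERGY_WH_PER_QUERY, LED_BULB_WATTS) / 60:.2f} min")
```

Under these assumed wattages, the numbers come out to about 0.065 teaspoons of water, about 1.2 seconds of oven use, and about 2 minutes of bulb use, which matches the comparisons in the post. That only shows the conversions are internally consistent; it says nothing about whether the underlying per-query figures are accurate.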

However, Altman didn’t disclose how this water-use figure was calculated or what variables were included. With no methodology or context, experts and skeptics alike are understandably wary. This isn’t the first time AI’s environmental costs have been called into question. Last year, The Washington Post collaborated with researchers and concluded that generating a 100-word email using GPT-4 consumes enough water to fill an entire bottle.

Context is key: water consumption per AI query depends heavily on datacenter cooling systems and their geographic location. Facilities in hotter, drier regions require more water for cooling than those in milder climates. Without those specifics, Altman’s teaspoon figure feels more like damage control than data transparency.

Interestingly, Altman predicts that over time, the “cost of intelligence” will drop to match the cost of electricity itself. While that sounds appealing, it conveniently sidesteps current environmental costs that critics argue are already too high. Until OpenAI backs these numbers with verifiable data, it’s tough to know whether we’re sipping teaspoons or pouring gallons.