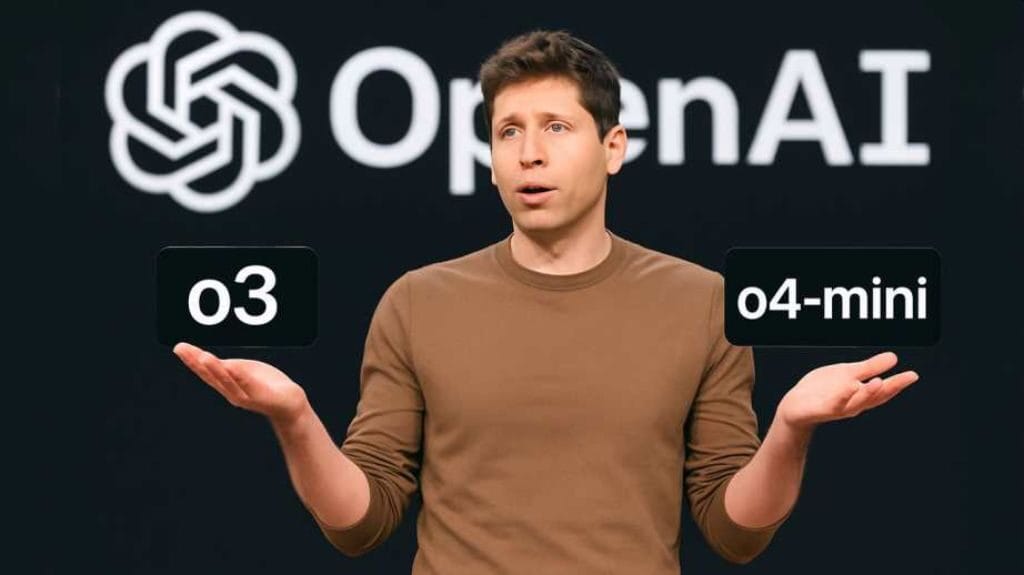

OpenAI’s latest reasoning AI models, o3 and o4-mini, are making headlines for the wrong reasons: they hallucinate more than their predecessors. Despite improved performance in coding and math, internal OpenAI benchmarks show o3 hallucinated in 33% of factual queries about people, while o4-mini reached a staggering 48%. The o3 figure is roughly double the rate of older reasoning models like o1 and o3-mini, and o4-mini's is higher still.

Worse yet, OpenAI admits it doesn’t fully understand why. Its report states that “more research is needed” to explain why scaling up reasoning models has worsened hallucination rates. Independent testers at Transluce found that o3 invents actions it can’t perform, such as claiming to have run external code. Experts warn this poses risks for use cases where accuracy is non-negotiable, such as law, medicine, and enterprise software.

As AI models pivot toward reasoning over brute-force data scaling, the balance between creativity and reliability is proving fragile. OpenAI insists improvements are ongoing, but for now, hallucinations remain an unresolved AI flaw.