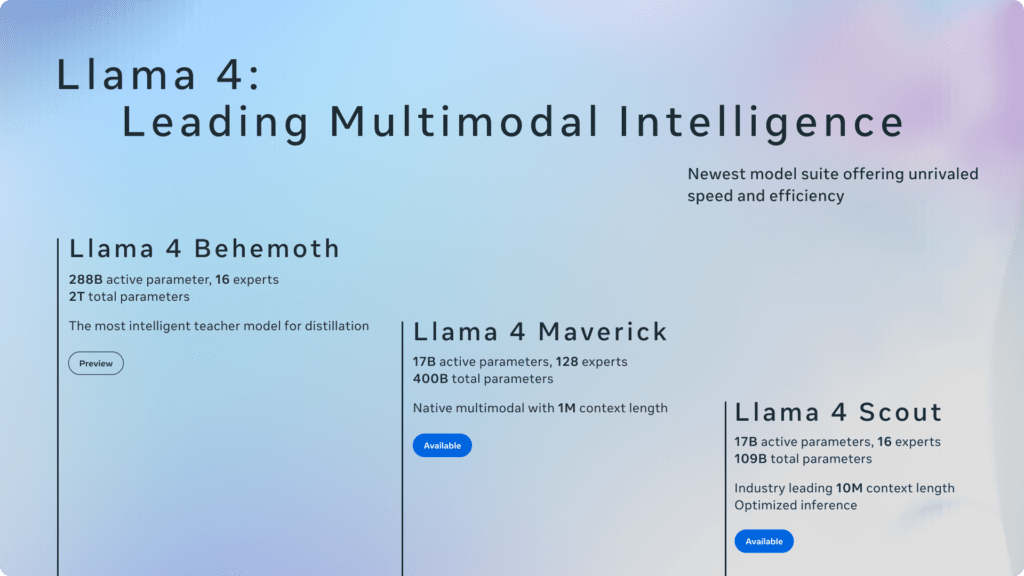

Meta has released a new lineup of open-weight Llama 4 AI models: Scout, Maverick, and the still-in-training Behemoth. All three are built on a Mixture of Experts (MoE) architecture and are natively multimodal. These are non-reasoning models, and Meta positions them as delivering top-tier performance while activating only a fraction of their total parameters for each token.

Llama 4 Scout has 109B total parameters (17B active) and supports a 10-million-token context window. Meta claims it outperforms Gemma 3, Mistral 3.1, and Gemini 2.0 Flash-Lite.

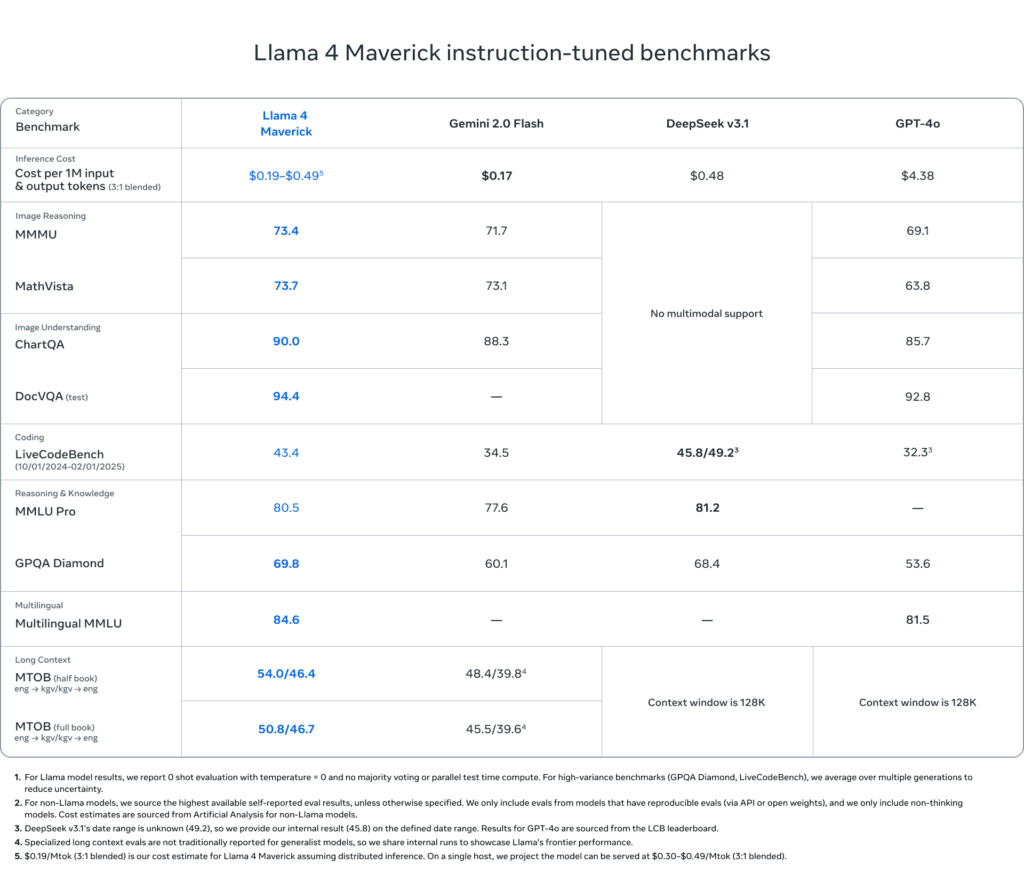

Llama 4 Maverick, with 400B total parameters and 128 experts (still just 17B active), posts an Elo score of 1,417 on LMArena, ranking second only to Gemini 2.5 Pro and ahead of GPT-4o, Grok 3, and Claude 3. Meta says its reasoning and coding performance rivals DeepSeek V3 while using fewer active parameters.

The largest model, Llama 4 Behemoth, is still training. It has roughly 2 trillion total parameters, with 288B active per token and 16 experts, and early STEM benchmarks from Meta suggest it may surpass GPT-4.5 and Claude 3.7 Sonnet.
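To put the total versus active parameter counts quoted above in perspective, here is a minimal Python sketch that computes what share of each model's parameters is active per token. The figures are the ones cited in this article (Behemoth's total is treated as a round 2,000B, which is an approximation), and the helper names `active_fraction` and `summarize` are made up for illustration; they are not part of any Meta tooling.

```python
# Parameter counts in billions, taken from the figures quoted in the article.
# Behemoth's total is approximated as 2000B ("2 trillion"); this is an assumption.
MODELS = {
    "Scout": {"total_b": 109, "active_b": 17},
    "Maverick": {"total_b": 400, "active_b": 17},
    "Behemoth": {"total_b": 2000, "active_b": 288},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Return the fraction of parameters that are active per token."""
    if total_b <= 0:
        raise ValueError("total parameter count must be positive")
    return active_b / total_b


def summarize(models: dict) -> dict:
    """Map each model name to its active-parameter share, in percent."""
    return {
        name: round(100 * active_fraction(p["total_b"], p["active_b"]), 2)
        for name, p in models.items()
    }


if __name__ == "__main__":
    for name, pct in summarize(MODELS).items():
        print(f"{name}: {pct}% of parameters active per token")
```

By this rough arithmetic, Scout activates about 16% of its parameters per token, Maverick about 4%, and Behemoth about 14%, which is what lets Maverick hold 400B parameters while doing roughly the per-token compute of a 17B dense model.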

Llama 4 is now rolling out via Meta AI on WhatsApp, Instagram, Messenger, and the Meta AI site in 40 countries, though multimodal features are limited to the US for now.