Are you looking for a final-year project that stands out and helps solve a real-world problem? In this article, we’ll walk you through building a Fake News Detection System using Python, step-by-step. Perfect for engineering students interested in AI, machine learning, and natural language processing (NLP).

Let’s get started. This is a straightforward project, and you can start working on it right away with the code below.

🧠 What Is Fake News Detection?

Fake news detection is the process of identifying misleading or false information published online, especially via news articles and social media. Using machine learning models, we can analyze the text and classify whether the news is real or fake.

🚀 What You’ll Learn

- Preprocessing text data using NLP techniques

- Building a classification model using Scikit-Learn

- Using TF-IDF Vectorization

- Deploying your model with Flask and testing it via a web form

- Bonus: Tips to present this project in your viva or demo

🛠️ Tools & Technologies

- Python 3.8+

- Pandas, NumPy, NLTK

- Scikit-Learn

- Flask

- Jupyter Notebook

- Dataset: Kaggle Fake News Dataset

📁 Step 1: Load & Explore the Dataset

import pandas as pd

# Load data into a DataFrame

df = pd.read_csv('Fake.csv')

print(df.head())

This dataset contains news articles with labels such as ‘FAKE’ and ‘REAL’. We’ll merge the title and text columns into a single content column for better analysis:

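# Fill missing titles or bodies so the concatenation never produces NaN

df['content'] = df['title'].fillna('') + ' ' + df['text'].fillna('')

# .copy() avoids a SettingWithCopyWarning on the assignment below

df = df[['content', 'label']].copy()

df['label'] = df['label'].map({'FAKE': 0, 'REAL': 1})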

🧹 Step 2: Preprocess the Text

Text cleaning is crucial in NLP. We’ll use a regular expression to replace every non-letter character with a space, convert the text to lowercase, remove stopwords, and apply stemming. The result is a normalized string of word stems that the vectorizer can process. If you would like a detailed guide to regular expressions in Python, feel free to comment and we will be right on it.

import re

from nltk.corpus import stopwords

from nltk.stem import PorterStemmer

# Requires a one-time download: import nltk; nltk.download('stopwords')

stop_words = set(stopwords.words('english'))

stemmer = PorterStemmer()

def clean_text(text):
    text = re.sub('[^a-zA-Z]', ' ', text)  # Replace non-alphabet characters with spaces
    text = text.lower().split()  # Convert to lowercase and tokenize
    text = [stemmer.stem(word) for word in text if word not in stop_words]  # Remove stopwords and stem
    return ' '.join(text)  # Rejoin into cleaned string

# Apply the cleaning function to all articles

df['clean_content'] = df['content'].apply(clean_text)
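To see what the regular expression and tokenizing steps of clean_text do before stopword removal and stemming, here is a minimal sketch that uses only the standard library. The sample headline is made up for illustration.

```python
import re

# Hypothetical headline, invented for this illustration
sample = "BREAKING: 5 Senators RESIGN!!! (Sources: unknown)"

# Same pattern as clean_text: every character that is not a letter becomes a space
letters_only = re.sub('[^a-zA-Z]', ' ', sample)

# Lowercase, then split on whitespace to get tokens
tokens = letters_only.lower().split()

print(tokens)  # ['breaking', 'senators', 'resign', 'sources', 'unknown']
```

Note that the digit and all punctuation disappear. That is fine for a bag-of-words model, but it also means numbers in an article never reach the classifier.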

📊 Step 3: Vectorize the Text

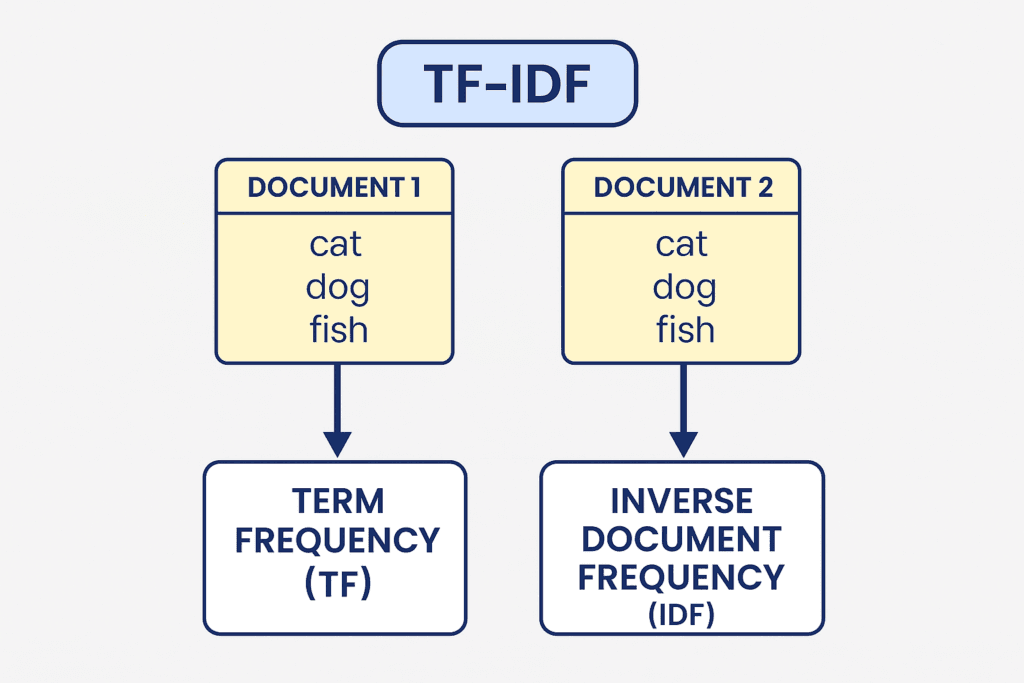

What is TF-IDF (Term Frequency–Inverse Document Frequency)?

TF-IDF is a numerical statistic used to reflect the importance of a word in a document relative to a collection of documents (corpus). It’s a key technique in natural language processing (NLP) for text mining and information retrieval.

- Term Frequency (TF): Measures how frequently a word appears in a document. Higher frequency means higher importance—within that document.

- Inverse Document Frequency (IDF): Measures how common or rare a word is across all documents. Rare terms get higher weight, as common words like ‘the’, ‘is’, etc., carry less meaning.

TF-IDF Score = TF * IDF
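As a rough illustration of the formula, the sketch below computes TF-IDF by hand for a three-document toy corpus (invented for this example), using the textbook definition IDF = ln(N / document frequency). Scikit-Learn's TfidfVectorizer applies a smoothed IDF and normalizes each row by default, so its numbers will differ from these, but the ranking idea is the same.

```python
import math
from collections import Counter

# Toy corpus, invented for illustration
docs = [
    "president signs new bill",
    "shocking hoax about president",
    "new bill sparks debate",
]
tokenized = [d.split() for d in docs]
n_docs = len(tokenized)

# Document frequency: in how many documents does each term appear?
doc_freq = Counter(term for doc in tokenized for term in set(doc))

def tf_idf(term, doc):
    tf = doc.count(term) / len(doc)             # share of the document taken by the term
    idf = math.log(n_docs / doc_freq[term])     # rarer across the corpus -> larger
    return tf * idf

# Score every word of the second document
scores = {term: round(tf_idf(term, tokenized[1]), 3) for term in tokenized[1]}
print(scores)
```

Here 'hoax' appears in one document and 'president' in two, so 'hoax' receives the higher score even though both occur once in the second document.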

Why Use TF-IDF in This Project?

In the context of fake news detection, TF-IDF helps:

- Quantify the textual content: We convert raw news articles into numerical vectors that can be processed by machine learning algorithms.

- Highlight important words: It gives more weight to unique words like ‘hoax’, ‘verified’, ‘sources’ over generic ones like ‘the’, ‘and’.

- Enhance model performance: The resulting vectors are sparse and weighted, which makes them ideal for fast and accurate classification using models like Naive Bayes.

Here is the implementation: we convert the cleaned text into numerical vectors with TfidfVectorizer.

from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer(max_features=5000)

X = vectorizer.fit_transform(df['clean_content']).toarray()  # Feature matrix

y = df['label'].values  # Labels

🧪 Step 4: Train the Model

What Happens in This Step?

After converting the text into TF-IDF vectors, we need to train a machine learning model to distinguish between real and fake news articles based on these numerical features. For this task, we use Multinomial Naive Bayes, a fast and effective classification algorithm especially suited for text data.

Why Are We Using Naive Bayes?

Naive Bayes is particularly effective for text classification tasks like spam detection, sentiment analysis, and fake news detection. Here’s why it’s a great fit for this project:

- Simplicity & Speed: It’s fast to train and easy to implement, making it ideal for beginners and scalable projects.

- Handles High-Dimensional Data: Text data, especially after TF-IDF vectorization, can be very sparse and high-dimensional. Naive Bayes handles this well.

- Probabilistic Interpretation: It predicts the probability of a document being fake or real, which adds interpretability.

- Performs Well on Small Datasets: Even with a modest dataset, Naive Bayes tends to perform surprisingly well for binary classification.
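To make the probabilistic idea concrete, here is a minimal sketch of a multinomial Naive Bayes classifier written from scratch with Laplace smoothing. It works on raw word counts rather than TF-IDF, the four training headlines are invented, and the helper names fit and predict_proba are hypothetical (they are not Scikit-Learn's API). Words never seen in training are skipped, which is a simplifying assumption.

```python
import math
from collections import Counter, defaultdict

# Toy training data, invented for illustration (0 = FAKE, 1 = REAL)
train = [
    ("shocking hoax miracle cure", 0),
    ("secret hoax exposed shocking", 0),
    ("senate passes budget bill", 1),
    ("court reviews budget ruling", 1),
]

def fit(samples, alpha=1.0):
    class_docs = Counter()               # documents per class
    word_counts = defaultdict(Counter)   # word counts per class
    vocab = set()
    for text, label in samples:
        class_docs[label] += 1
        for word in text.split():
            word_counts[label][word] += 1
            vocab.add(word)
    return class_docs, word_counts, vocab, alpha

def predict_proba(model, text):
    class_docs, word_counts, vocab, alpha = model
    total_docs = sum(class_docs.values())
    log_scores = {}
    for label in class_docs:
        score = math.log(class_docs[label] / total_docs)   # log prior
        total_words = sum(word_counts[label].values())
        for word in text.split():
            if word in vocab:  # skip unseen words
                likelihood = (word_counts[label][word] + alpha) / (total_words + alpha * len(vocab))
                score += math.log(likelihood)
        log_scores[label] = score
    # Turn log scores into probabilities that sum to 1
    top = max(log_scores.values())
    exp_scores = {k: math.exp(v - top) for k, v in log_scores.items()}
    norm = sum(exp_scores.values())
    return {k: v / norm for k, v in exp_scores.items()}

model = fit(train)
probs = predict_proba(model, "shocking budget hoax")
print(probs)  # class 0 (FAKE) gets 0.75, class 1 (REAL) gets 0.25
```

The word 'budget' pulls toward REAL, but 'shocking' and 'hoax' together outweigh it, so the headline leans FAKE.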

Key Concepts:

- train_test_split(): Splits the dataset into training and testing sets, helping us evaluate how well the model performs on unseen data.

- MultinomialNB(): A Naive Bayes variant designed for word-count features; fractional TF-IDF weights also work in practice.

- fit(): Trains the model on the training data.

- predict(): Uses the trained model to classify test data.

- accuracy_score(): Evaluates how accurate the predictions were against the actual labels.

This helps ensure the model generalizes well and doesn’t just memorize the training data. We’ll split our data into training and testing sets and use a Naive Bayes classifier.


from sklearn.model_selection import train_test_split

from sklearn.naive_bayes import MultinomialNB

from sklearn.metrics import accuracy_score

# Split dataset

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Initialize and train model

model = MultinomialNB()

model.fit(X_train, y_train)

# Predict and evaluate

preds = model.predict(X_test)

print(f"Accuracy: {accuracy_score(y_test, preds) * 100:.2f}%")
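Accuracy is a single number, so it can hide which kind of mistake the model makes. The sketch below, using made-up labels and predictions, shows what accuracy measures and how precision and recall for the FAKE class are derived from the same counts. Treating FAKE as the class of interest is an assumption of this example; sklearn.metrics also provides confusion_matrix and classification_report for the same purpose.

```python
# Hypothetical labels and predictions (0 = FAKE, 1 = REAL), invented for illustration
y_true = [0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
y_pred = [0, 0, 1, 1, 1, 1, 1, 1, 1, 1]

pairs = list(zip(y_true, y_pred))
tp = sum(1 for t, p in pairs if t == 0 and p == 0)  # fake articles caught
fn = sum(1 for t, p in pairs if t == 0 and p == 1)  # fake articles missed
fp = sum(1 for t, p in pairs if t == 1 and p == 0)  # real articles wrongly flagged
tn = sum(1 for t, p in pairs if t == 1 and p == 1)  # real articles passed

accuracy = (tp + tn) / len(pairs)
precision = tp / (tp + fp) if tp + fp else 0.0  # of those flagged FAKE, how many were fake
recall = tp / (tp + fn) if tp + fn else 0.0     # of the fake articles, how many were flagged

print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f}")
# accuracy=0.80 precision=1.00 recall=0.50
```

Here the model is 80% accurate yet misses half of the fake articles, which is worth being able to explain in a demo.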

🌐 Step 5: Build a Flask App

Why Use Flask?

Flask is a lightweight web framework in Python that allows us to quickly convert our machine learning model into a web application. It’s ideal for simple projects like this because:

- It’s minimal and easy to understand

- It supports routing and form submissions

- It integrates well with Python ML models

- It allows rapid testing via the local server (localhost)

What This Step Does:

- Loads the trained model and vectorizer using pickle.load()

- Sets up a homepage ('/') to render an HTML form

- Accepts POST requests ('/predict') from the form

- Processes input: cleans and vectorizes the user’s input text

- Predicts the result using the model and returns either ‘REAL’ or ‘FAKE’ as feedback on the page

This creates a real-time interface to interact with your trained fake news classifier without needing Jupyter Notebook or Python CLI. We’ll create a simple web interface where users can input news content and get real-time predictions.


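# app.py

import pickle
import re

from flask import Flask, request, render_template
from nltk.corpus import stopwords
from nltk.stem import PorterStemmer

app = Flask(__name__)

# Load the saved model and vectorizer once, at startup
with open('model.pkl', 'rb') as f:
    model = pickle.load(f)

with open('vectorizer.pkl', 'rb') as f:
    vectorizer = pickle.load(f)

# Same preprocessing as Step 2; it must match what the model was trained on
stop_words = set(stopwords.words('english'))
stemmer = PorterStemmer()

def clean_text(text):
    words = re.sub('[^a-zA-Z]', ' ', text).lower().split()
    return ' '.join(stemmer.stem(word) for word in words if word not in stop_words)

@app.route('/')
def home():
    return render_template('index.html')

@app.route('/predict', methods=['POST'])
def predict():
    text = request.form['news']
    clean = clean_text(text)  # Clean the input text
    vect = vectorizer.transform([clean]).toarray()  # Convert to vector
    pred = model.predict(vect)[0]  # Predict
    return render_template('index.html', prediction='REAL' if pred else 'FAKE')

if __name__ == '__main__':
    app.run(debug=True)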
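The route above mixes web handling with prediction logic. One possible refactor, sketched here as a suggestion rather than part of the original project, pulls the prediction steps into a plain function so they can be tested without starting Flask. The name classify and the two stub classes are hypothetical; the stubs only imitate the transform() and predict() methods that the real vectorizer and model expose.

```python
def classify(text, model, vectorizer, clean):
    """Return 'REAL' or 'FAKE' for one piece of text."""
    cleaned = clean(text)
    features = vectorizer.transform([cleaned])
    label = model.predict(features)[0]
    return 'REAL' if label else 'FAKE'


# Stand-ins that mimic the transform()/predict() interface, for testing only
class StubVectorizer:
    def transform(self, texts):
        return [[len(t.split())] for t in texts]  # one feature: word count


class StubModel:
    def predict(self, rows):
        return [1 if row[0] >= 3 else 0 for row in rows]  # arbitrary rule


result_long = classify("Senate passes budget bill", StubModel(), StubVectorizer(), str.lower)
result_short = classify("Shocking hoax", StubModel(), StubVectorizer(), str.lower)
print(result_long, result_short)  # REAL FAKE
```

Inside the Flask route, the same function could be called with the real model, vectorizer, and clean_text.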

🗃️ Save Model and Vectorizer

What is Pickle?

pickle is a Python library used to serialize and deserialize Python objects. In this project, we use it to save our trained machine learning model and TF-IDF vectorizer to disk so they can be reused later without retraining.

Why Use Pickle Here?

- Avoids retraining every time the server restarts

- Enables deployment: the model is loaded into memory in the Flask app

- Faster response: predictions happen instantly since the model is preloaded

Implementation Steps:

Use pickle to save your trained model and vectorizer for reuse in the web app.

import pickle

# Context managers close the files even if dump() raises

with open('model.pkl', 'wb') as f:
    pickle.dump(model, f)

with open('vectorizer.pkl', 'wb') as f:
    pickle.dump(vectorizer, f)
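If you have not used pickle before, the following self-contained sketch shows the save-and-load round trip on a stand-in object. The dictionary is a placeholder invented for this example; a trained model and vectorizer are saved and restored the same way.

```python
import os
import pickle
import tempfile

# Stand-in for a trained model: any picklable Python object works the same way
fake_model = {"classes": ["FAKE", "REAL"], "weights": [0.25, 0.75]}

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "model.pkl")

    # Serialize to disk
    with open(path, "wb") as f:
        pickle.dump(fake_model, f)

    # Deserialize into a new object
    with open(path, "rb") as f:
        restored = pickle.load(f)

print(restored == fake_model)  # True
```

Only load pickle files you created yourself, since unpickling can run arbitrary code.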

🖥️ index.html (Simple Web UI)

We have kept this simple, but you can add a stylesheet if you want to make the page look more polished. Flask’s render_template() looks for this file in a templates folder next to app.py.

<!doctype html>

<html>

<head><title>Fake News Detector</title></head>

<body>

<h2>Enter News Content</h2>

<form method="POST" action="/predict">

<textarea name="news" rows="10" cols="80"></textarea><br>

<input type="submit" value="Detect">

</form>

<h3>{{ prediction }}</h3>

</body>

</html>

This basic form lets users input article text and shows the prediction result.

🧪 How to Use This Project

- Run the Flask app with python app.py

- Open your browser and visit http://localhost:5000

- Enter a news article or snippet of content into the text box

- Click “Detect” to see if the article is predicted as REAL or FAKE

⚠️ Common Areas Where the Project May Fail

- NLTK Stopwords Not Downloaded: Run nltk.download('stopwords')

- Pickle Files Not Found: Ensure model.pkl and vectorizer.pkl are present

- Input Too Short: Very short inputs may give unpredictable results

- Bias in Dataset: If trained on a biased dataset, predictions may be skewed

- Dependency Errors: Use a requirements.txt to manage dependencies
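A minimal requirements.txt for this project might look like the following. The package list is inferred from the imports used in this article; versions are left unpinned because the article does not specify any, so pin them to whatever you tested with.

```text
flask
nltk
numpy
pandas
scikit-learn
```

Install everything with pip install -r requirements.txt.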

Your professors are likely to ask questions about the project, so here are some to prepare for.

📋 Viva Questions You Might Face

- What is the difference between TF and TF-IDF?

- Why did you choose Naive Bayes and not SVM or Random Forest?

- How does stemming affect accuracy?

- What are the limitations of your project?

- Can your model be fooled? How?

- What would you do to improve this model?

- How do you ensure your model isn’t overfitting?

- What is the purpose of train_test_split()?

- Why use Flask and not Django?

- How would you scale this project to serve millions?

💡 Final Thoughts

- Improve accuracy with LSTM or BERT models

- Add URL scraping to auto-fetch article content

- Deploy online via Render, Replit, or Heroku


Please comment and share this article if you would like a downloadable .zip of this project with code, Flask app, and deployment notes.

Happy coding!
